My friend Jess Clarke and I have done an article swap. You can check out her blog here: http://jessclarkeblogger.blogspot.co.uk

Why is there a high rate of CHD in the UK, and why do different areas of the UK have different rates of CHD deaths?

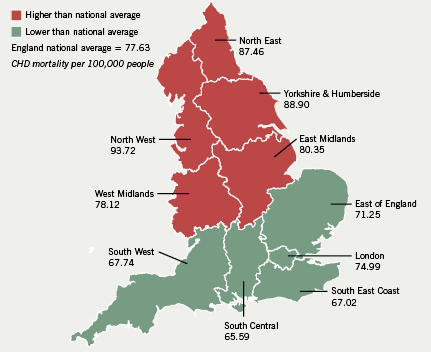

Coronary heart disease (CHD) is the term for what happens when your heart's blood supply is blocked or interrupted by a build-up of fatty substances, called atheroma, in the coronary arteries. CHD is the most common cause of death in the UK, with 80,000 deaths from CHD each year. CHD rates also differ across the UK. In general, death rates from CHD are highest in Scotland and the North of England and lowest in the South of England.

There are many factors that increase the risk of CHD and may raise the rate of CHD in a particular country, such as age, smoking and obesity.

Age can greatly affect the chances of developing CHD. The risk of CHD increases with age, and because the UK has an ageing population, a larger share of the population is at higher risk of developing CHD. In the UK, 10 million people are over 65 years old. CHD is a serious concern among older adults because more people are living beyond 65, an age group with a high rate of CHD, and this age structure could help explain why the UK has such high CHD rates. In the UK there are often communities and areas where particular age groups are concentrated. For example, some areas are very family-oriented, with many middle-aged working people with children, while other areas have a higher population of retired or older people. There are five retirement villages in Cheshire, for instance, which could contribute to CHD mortality rates being slightly higher in the North of England, as older people are at a higher risk of developing CHD and dying from a coronary event.

Smoking is another factor that can greatly increase the risk of CHD. Research by the World Health Organization (WHO) estimates that over 20% of cardiovascular disease is due to smoking, and the risk of dying from CHD is 60% higher in smokers. The prevalence of smoking in the UK fell to its lowest level in 2013, with 18.7% of people over 18 smoking. While this may seem like a low percentage, it still amounts to 10 million adults who smoke, and the number of ex-smokers is even higher. Ex-smokers still have an increased risk of CHD for at least 15 years after stopping, so even though fewer people currently smoke, the large number of ex-smokers in the UK could again help explain why the UK has a high rate of CHD.

Smoking does not only increase the risk of CHD for the smoker: regular exposure to passive smoking also increases CHD risk by 25-30%. Many people are regularly exposed to passive smoking in public and so may have an increased risk, but people who live with smokers, and therefore experience passive smoking very frequently, have a 50-60% increased risk of death from CHD. This helps explain why the UK has high CHD rates, as it is a Western country with a relatively high number of smokers and ex-smokers, and a large number of people exposed to passive smoking on a regular basis. It could also help explain why certain areas in the UK have higher CHD mortality rates. People living in the countryside are less likely to be exposed to smoke on a regular basis, provided they do not smoke and do not live with a smoker, whereas people in large, busy cities are more likely to be exposed to passive smoke frequently. These urban areas may therefore have higher rates of CHD and of CHD mortality.

Poor nutrition can also increase the risk of CHD. CHD risk is related to blood cholesterol levels, which can be raised by eating too much fat, in particular saturated fat; high cholesterol contributes to the build-up of atheroma that narrows the coronary arteries. Obesity, a major consequence of poor nutrition, also greatly increases the risk of CHD. In the UK, adult obesity rates have almost quadrupled in the last 25 years, and nearly two-thirds of men and women in the UK are obese or overweight, according to a new analysis by the Institute for Health Metrics and Evaluation. According to an American study, women with a BMI in the overweight category had a 50% higher risk of fatal or non-fatal coronary heart disease, and men a 72% higher risk. This shows that being overweight greatly increases the risk of CHD, and the high number of overweight and obese people in the UK could be pushing up the UK's rate of CHD.

Poor nutrition can also mean a lack of certain nutrients, such as the omega-3 fatty acids found in oily fish, which have been shown to reduce CHD mortality. For poorer people living in poorer areas of the UK, fish rich in omega-3 fatty acids may be too expensive, particularly when much cheaper options are available. These cheaper foods are often less healthy and could contribute to the high obesity levels, which could help explain why certain areas have higher CHD mortality rates.

Regular exercise can greatly reduce the risk of CHD; however, many people in the UK do very little exercise, which contributes to obesity and high levels of CHD. A study examining physical activity across England found that nearly 80% of the population fails to meet key national government targets, which include doing moderate exercise for 30 minutes at least 12 times a month. According to Professor Carol Propper, “the levels of physical activity is shockingly low” in the UK. This lack of exercise across the UK could therefore contribute to the high rates of CHD. However, the amount of exercise differs around the UK. One of Britain’s “laziest” areas is Sandwell in the West Midlands, where, for example, only one in 20 people gets on a bike in any month. By contrast, in areas such as Wokingham in Berkshire, 82% of residents exercise at least once a month. These differences could help explain why some areas of the UK have higher or lower CHD mortality rates than others.

Ethnicity can also affect the risk of CHD. Studies have shown that South Asian people living in the UK have a higher premature death rate from CHD: 46% higher for men and 51% higher for women. Proposed explanations for this include migration, disadvantaged socio-economic status and a ‘proatherogenic’ diet. Net migration to the UK remains at record levels, at 250,000 people a year. Some of these people will be from South Asia, which could add to the high rates of CHD in the UK. In certain areas of the UK, people of the same ethnicity also often form communities; in Rugby, for example, there is a large number of Eastern Europeans. Different ethnic communities within the UK could therefore mean differing rates of CHD between areas, if certain ethnicities are more or less at risk of dying from CHD.

Rates of CHD in the UK are currently high because of a number of different factors. Some of these factors are more or less common in certain areas of the UK, for example among different socio-economic groups, and this results in a range of CHD mortality rates across the country.